Since nearly the beginning of genome-wide association studies, the PLINK software package (developed by Shaun Purcell’s group at the Broad Institute and MGH) has been the standard for manipulating the large-scale data produced by these studies. Over the course of its development, numerous features and options have been added to enhance its capabilities, but it is best known for its core functionality: quality control and standard association tests.

Nearly 10 years ago (around the time PLINK was just getting started), the CHGR Computational Genomics Core (CGC) at Vanderbilt University started work on a similar framework for implementing genotype QC and association tests. This project, called PLATO, has stayed active primarily to provide functionality and control that (for one reason or another) is unavailable in PLINK. We have found it especially useful for processing ADME and DMET panel data because it supports QC and association tests of multi-allelic variants.

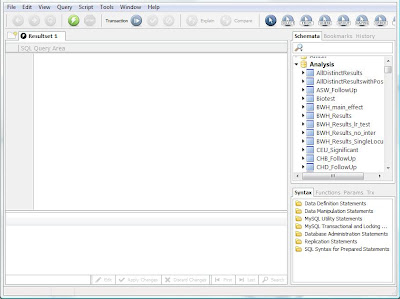

PLATO runs from a command-line interface, but it also accepts a batch file that lets users specify the order in which QC filtering steps are applied. When multiple QC steps are requested in a single run of PLINK, the order of application is hard-coded and not well documented. As a result, users who want this level of control must run a sequence of PLINK commands, generating new data files at each step, which increases both compute time and disk usage. PLATO also offers a variety of data reformatting options for other genetic analysis programs, making it easy to run EIGENSTRAT, for example.
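To see why the order of QC steps matters, here is a small Python sketch. It is not PLATO or PLINK syntax; the genotype matrix, the 0.8 call-rate threshold, and the function names are all made up for illustration. It applies a sample call-rate filter and a marker call-rate filter in both orders and compares what survives.

```python
# Toy genotype matrix: rows are samples, columns are markers.
# None marks a missing genotype call. All values are hypothetical.
GENOTYPES = [
    [0, 1, 2, 0],
    [1, 2, 0, 1],
    [None, None, None, 2],  # one poorly genotyped sample
    [2, 0, 1, 0],
]

THRESHOLD = 0.8  # assumed minimum call rate for both filters


def call_rate(values):
    """Fraction of non-missing calls in a list of genotypes."""
    return sum(v is not None for v in values) / len(values)


def filter_samples(matrix, threshold):
    """Keep only samples (rows) whose call rate meets the threshold."""
    return [row for row in matrix if call_rate(row) >= threshold]


def filter_markers(matrix, threshold):
    """Keep only markers (columns) whose call rate meets the threshold."""
    if not matrix:
        return []
    keep = [
        j
        for j in range(len(matrix[0]))
        if call_rate([row[j] for row in matrix]) >= threshold
    ]
    return [[row[j] for j in keep] for row in matrix]


def shape(matrix):
    """Return (number of samples, number of markers)."""
    return (len(matrix), len(matrix[0]) if matrix else 0)


# Order A: drop bad samples first, then bad markers.
samples_first = filter_markers(filter_samples(GENOTYPES, THRESHOLD), THRESHOLD)

# Order B: drop bad markers first, then bad samples.
markers_first = filter_samples(filter_markers(GENOTYPES, THRESHOLD), THRESHOLD)

print("samples first:", shape(samples_first))  # (3, 4)
print("markers first:", shape(markers_first))  # (4, 1)
```

In this toy data, removing the one bad sample first lets all four markers pass, while filtering markers first discards three markers that were only failing because of that sample. Real data are rarely this extreme, but the same effect is why an explicit, user-controlled order of operations is useful.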

The QC output from each filtering step is much more detailed in PLATO: results can be reported per group (founders only, parents only, etc.), and there is more detail on why samples fail sex checks, Hardy-Weinberg checks, and Mendelian inconsistency checks, which facilitates deeper investigation of these errors. With family data, disabling a sample because of poor genotype quality still retains its pedigree information, which is useful for phasing and transmission tests. Full documentation and download links can be found at https://chgr.mc.vanderbilt.edu/plato. Special thanks to Yuki Bradford in the CGC for her thoughts on this post.